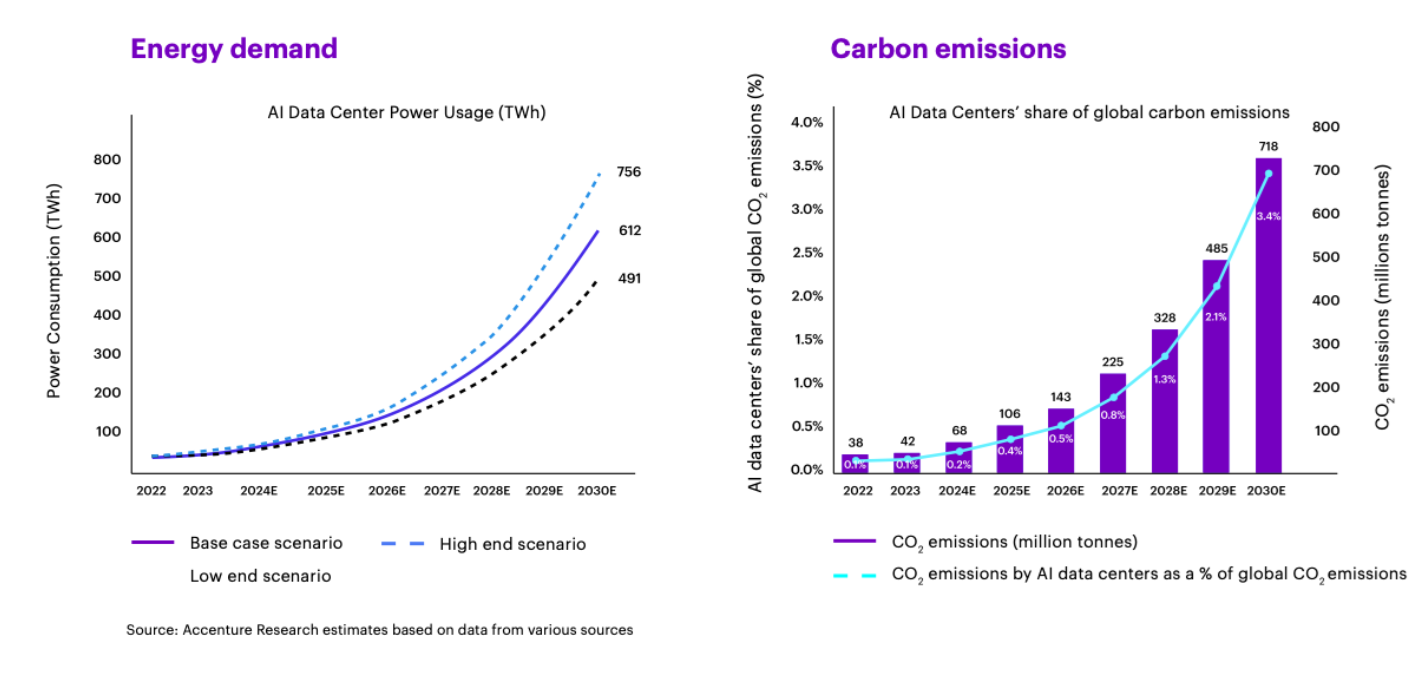

The environmental impact of artificial intelligence is rapidly becoming one of the most pressing challenges of our time. By 2030, according to our estimates at Accenture, AI’s energy consumption will grow more than tenfold, reaching 612 terawatt-hours (TWh) a year, the equivalent of Canada’s total electricity consumption. This strain on global power grids will drive an 11-fold surge in AI’s carbon emissions, to 718 million metric tons, accounting for 3.4 per cent of global emissions (up from 0.2 per cent today). At the same time, AI hubs are projected to consume more than 3 billion cubic metres of water a year that is lost to evaporation and other losses rather than returned to its original source. That’s more than the total annual freshwater withdrawals of countries such as Norway or Sweden.

Simply put, the technology that promises significant productivity and revenue growth could also undermine the very sustainability goals businesses are trying to achieve. This is the AI “efficiency paradox.” And with only 16 per cent of the world’s largest companies currently on track to meet their net-zero goals, the problem is likely to get worse. There is, however, a solution: not to slow down AI adoption, but to get smarter about how we scale it.

Businesses can shape a better, more sustainable future while unlocking significant cost savings, enhancing their brand reputation, and attracting sustainability-focused investors. But to make this transformation possible, they must adjust their AI architectures, operations, and sustainability standards to reduce their systems’ energy consumption, water use, and carbon emissions.

A precedent for such progress already exists. From 2010 to 2018, data centres increased their storage and computing power by 500 per cent, yet their energy consumption rose by just 6 per cent. This didn’t happen by accident: it stemmed from businesses scaling computing while cutting energy waste, costs, and carbon impact. Had the trend from 2005 to 2010 persisted, energy consumption would have risen by more than 450 per cent between 2010 and 2018.
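
Reading the 500 per cent increase as a sixfold rise in capacity, the two growth figures imply that the energy needed per unit of computing fell by roughly four-fifths over the period. A quick check of that arithmetic, treating “storage and computing power” as a single index (a simplification; the figure is not broken down further here):

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (e.g. -0.5 means halved)."""
    return after / before - 1.0

# Indexed to 2010 = 1.0; "+500 per cent" means six times the 2010 level.
capacity_2010, capacity_2018 = 1.0, 6.0
energy_2010, energy_2018 = 1.0, 1.06  # +6 per cent

energy_per_unit_change = relative_change(
    energy_2010 / capacity_2010, energy_2018 / capacity_2018
)
print(f"{energy_per_unit_change:.1%}")  # -82.3%
```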

As our modelling indicates, if projected power consumption per graphics processing unit (GPU) grows at a slightly slower pace of 3 per cent a year, and if energy efficiency (measured by power usage effectiveness) and utilization improve to U.S. levels across the globe, the energy consumption of AI data centres could fall by 20 per cent, to 491 TWh in 2030 (see Figure 1). This optimization alone would cut AI’s projected 2030 energy consumption by 121 TWh, a saving almost equivalent to Norway’s total electricity use in a year. However, if these assumptions err by a small margin in the other direction, consumption could instead rise 24 per cent, to 756 TWh.
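
The scenario figures can be checked against the 612 TWh baseline given in the opening paragraph; the 20 and 24 per cent figures turn out to be rounded values of the underlying differences. This sketch only redoes that arithmetic and makes no assumptions beyond the three numbers in the text:

```python
BASELINE_TWH = 612  # 2030 baseline projection from the opening paragraph
SCENARIOS_TWH = {"optimized": 491, "pessimistic": 756}

changes = {}
for name, twh in SCENARIOS_TWH.items():
    delta = twh - BASELINE_TWH
    changes[name] = (delta, delta / BASELINE_TWH)
    print(f"{name}: {delta:+} TWh ({delta / BASELINE_TWH:+.1%})")
# optimized: -121 TWh (-19.8%)
# pessimistic: +144 TWh (+23.5%)
```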

Figure 1: AI Data Centre Power Usage and Carbon Emissions

Addressing the AI “efficiency paradox”

Companies can successfully scale AI and significantly reduce its environmental impact by taking the following five strategic actions:

- Putting smarter silicon to work

- Unlocking scaling efficiency with algorithmic design

- Decarbonizing data centres with an edge computing-focused strategy

- Taking a cross-organization approach to sustainable AI

- Collaborating to embed AI governance as code

Below, we detail these actions and how businesses can apply them.

Putting Smarter Silicon to Work: AI still runs on computing architectures that were never designed for it. Nobel laureate Geoffrey Hinton, a pioneer of deep learning, warns that today’s “immortal computing” architecture, in which software outlives the hardware it runs on, creates inefficiencies that AI amplifies. Because AI depends on constant, large-scale memory access, its software and hardware need to be optimized together.

Consider a small AI system with 1,000 input neurons and 1,000 output neurons: connecting every input to every output requires one million weight parameters (1,000 × 1,000), each of which must be retrieved from memory during operation and updated during training. As AI models scale to billions of parameters, compute and memory requirements grow steeply (the weights in such a fully connected layer grow with the product of its input and output sizes, so doubling both quadruples them), placing immense strain on processing power. Data movement between memory and processors further diminishes performance.
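
The arithmetic behind the 1,000 × 1,000 example is easy to check, and it shows why memory traffic matters: every weight has to be read each time the layer runs. A small sketch, assuming 32-bit weights, no bias terms, and a hypothetical 7-billion-parameter model for scale:

```python
def dense_layer_params(n_in: int, n_out: int, bias: bool = False) -> int:
    """Weights in a fully connected layer: every input neuron links to every output neuron."""
    return n_in * n_out + (n_out if bias else 0)

def weight_bytes(n_params: int, bits_per_param: int = 32) -> int:
    """Memory needed just to hold the weights at a given numeric precision."""
    return n_params * bits_per_param // 8

params = dense_layer_params(1_000, 1_000)
print(params)                                      # 1000000
print(weight_bytes(params))                        # 4000000 bytes (about 4 MB at 32-bit)
print(dense_layer_params(2_000, 2_000) // params)  # 4: doubling both widths quadruples the weights
print(weight_bytes(7_000_000_000) / 1e9)           # 28.0 GB for a hypothetical 7B-parameter model
```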

AI’s next performance and efficiency breakthrough won’t come from hardware or software alone, but from building them together with what we call “smarter silicon.” By co-designing hardware and software, companies can eliminate inefficiencies, cut costs, reduce carbon footprints, and enable faster, more scalable AI solutions.

For example, smarter silicon for “in-memory processing” handles data directly in memory instead of shuttling it back and forth between storage and processors. By minimizing unnecessary data transfers and computing where the data resides, it offers a powerful solution. Enterprise software provider SAP has proved this concept in enterprise applications with its in-memory database, SAP HANA, reducing query times from hours to seconds while slashing energy consumption.

Similarly, in 2021, Samsung Electronics’ processing-in-memory technology cut data-movement energy use by up to 85 per cent by integrating AI processing into high-bandwidth memory. Rather than simply scaling up GPU clusters, organizations can evaluate these emerging AI architectures, using vendor-specific AI libraries and hardware-aware AI techniques, to reduce energy costs and optimize real-time AI applications such as fraud detection and autonomous trading.

Analog computing offers another energy-efficient alternative, performing mathematical operations by directly manipulating physical quantities such as voltage or current. Fabless semiconductor start-up Mythic’s compute-in-memory approach, for example, has been shown to cut AI inference power use by a factor of 20 in edge devices by processing data directly where it is stored. This makes a critical difference in power- and heat-constrained environments, such as smartphones, Internet of Things devices, and industrial automation.
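
The principle behind analog compute-in-memory can be illustrated with an idealized crossbar array: weights are stored as device conductances, inputs are applied as voltages, Ohm’s law performs each multiplication, and Kirchhoff’s current law adds the products on each shared column wire. The sketch below simulates that in arbitrary units, with optional Gaussian noise as a crude stand-in for analog imprecision. It is a generic illustration, not Mythic’s design; real arrays also need digital-analog converters and paired devices to represent negative weights.

```python
import random

def crossbar_mvm(conductances, voltages, noise_std=0.0, seed=0):
    """Idealized analog crossbar computing currents[j] = sum_i G[i][j] * V[i].

    conductances: rows x cols weights stored as device conductances (arbitrary units)
    voltages: one input voltage per row; returns one output current per column
    """
    rng = random.Random(seed)
    currents = []
    for j in range(len(conductances[0])):
        # Ohm's law gives each product G*V; Kirchhoff's current law sums them on the column wire.
        current = sum(row[j] * v for row, v in zip(conductances, voltages))
        if noise_std > 0:
            current += rng.gauss(0.0, noise_std)  # crude stand-in for analog device noise
        currents.append(current)
    return currents

G = [[1.0, 0.5],
     [0.25, 2.0],
     [0.0, 1.0]]
V = [1.0, 2.0, 3.0]
print(crossbar_mvm(G, V))                  # [1.5, 7.5]
print(crossbar_mvm(G, V, noise_std=0.05))  # close to, but not exactly, [1.5, 7.5]
```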

Parallel processing—with AI tasks split into smaller operations and run simultaneously across multiple processors—is another approach to reduce energy use. Recent academic research shows that hardware–software co-design, combining parallel processing with compute-in-memory architectures, improves energy efficiency by 16 to 36 times over traditional GPUs, without loss in accuracy.
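
Splitting one AI operation into independent pieces can be sketched with a matrix-vector product, the core of neural-network inference: each block of output rows depends only on its own rows of the weight matrix, so blocks can be computed simultaneously and stitched back together. Python threads are used only to keep the sketch self-contained; under CPython’s global interpreter lock they demonstrate the decomposition rather than a real speed-up, which would come from separate processes, native libraries, or parallel hardware. This is not the co-designed architecture from the research mentioned in the text.

```python
from concurrent.futures import ThreadPoolExecutor

def matvec_rows(matrix, vector, start, stop):
    """Compute output entries for rows [start, stop) of matrix @ vector."""
    return [sum(w * x for w, x in zip(matrix[r], vector)) for r in range(start, stop)]

def parallel_matvec(matrix, vector, workers=4):
    """Split rows into chunks, compute each chunk concurrently, then concatenate in order."""
    n = len(matrix)
    if n == 0:
        return []
    chunk = -(-n // workers)  # ceiling division so every row is covered
    bounds = [(s, min(s + chunk, n)) for s in range(0, n, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = list(pool.map(lambda b: matvec_rows(matrix, vector, *b), bounds))
    return [y for part in parts for y in part]

matrix = [[float(r + c) for c in range(3)] for r in range(10)]
vector = [1.0, 0.5, 0.25]
result = parallel_matvec(matrix, vector)
print(result == matvec_rows(matrix, vector, 0, len(matrix)))  # True
```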

Perhaps the most dramatic shift comes from custom AI chips. Semiconductor manufacturer Advanced Micro Devices is redefining chip design with modular, chiplet-based architecture, allowing businesses to integrate specialized AI chiplets directly into processors to optimize them for specific needs. This means that AI models can be fine-tuned to run more efficiently on custom silicon, rather than forcing software to conform to pre-designed hardware constraints.

Further optimization is possible through the monetization of excess or underutilized GPU capacity already in place, aligning economic incentives with sustainability commitments. For example, blockchain-powered GPU marketplaces allow buying and selling excess compute power in real time, helping recoup costs and lower emissions per model trained.

Unlocking Scaling Efficiency with Algorithmic Design: Open-source models such as DeepSeek introduce a new way for businesses to deploy more sustainable and cost-effective AI models at scale. Reportedly developed for US$5.6 million using 2,000 Nvidia H800 GPUs over 55 days, DeepSeek uses 10–40 times less energy than its counterparts.
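
The reported training figures translate into a rough compute budget. Assuming all 2,000 GPUs ran around the clock for the full 55 days (an assumption; the reported figures do not say so), the cost works out to roughly US$2 per GPU-hour:

```python
GPUS = 2_000               # reported GPU count
DAYS = 55                  # reported training duration
REPORTED_COST_USD = 5.6e6  # reported development cost

gpu_hours = GPUS * DAYS * 24  # assumes continuous, full utilization
implied_rate = REPORTED_COST_USD / gpu_hours
print(f"{gpu_hours:,} GPU-hours, about US${implied_rate:.2f} per GPU-hour")
# 2,640,000 GPU-hours, about US$2.12 per GPU-hour
```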

Several innovations contributed to DeepSeek’s efficiency. Traditional deep learning uses 32-bit floating-point precision, storing each number in 32 bits of memory. DeepSeek reduced this to 8-bit floating point for much of its training computation, using dynamic scaling techniques to preserve precision while significantly reducing memory use and energy consumption.
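
Why dynamic scaling matters can be sketched by simulating the FP8 E4M3 format (4 exponent bits, 3 mantissa bits, largest finite value 448) in ordinary Python floats. Each block of values gets its own scale so that its largest magnitude maps onto FP8’s largest value; with a single scale for a whole tensor, small values underflow to zero. This is a simplified “fake quantization” sketch under those assumptions, not DeepSeek’s actual recipe; the block size of 4 and the sample weights are hypothetical and chosen for readability. Storing values in 8 rather than 32 bits cuts their memory footprint by a factor of four, plus a small overhead for one higher-precision scale per block.

```python
import math

E4M3_MAX = 448.0        # largest finite value in the FP8 E4M3 format
E4M3_MANTISSA_BITS = 3  # explicit mantissa bits in E4M3
E4M3_MIN_EXP = -6       # smallest normal exponent; smaller values become subnormal

def round_to_e4m3(x: float) -> float:
    """Simulate rounding a float to the nearest FP8 E4M3 value (saturating at +/-448)."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)
    exp = max(math.floor(math.log2(mag)), E4M3_MIN_EXP)
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)  # gap between neighbouring FP8 values
    return sign * min(round(mag / step) * step, E4M3_MAX)

def fake_quantize(values, block_size):
    """Scale each block so its largest magnitude maps to E4M3_MAX, round to FP8, scale back."""
    out = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / E4M3_MAX if amax > 0 else 1.0  # one higher-precision scale per block
        out.extend(round_to_e4m3(v / scale) * scale for v in block)
    return out

# Hypothetical weights with a wide dynamic range
weights = [0.0001, 0.0002, -0.00015, 0.00005, 100.0, 200.0, -150.0, 50.0]
per_tensor = fake_quantize(weights, block_size=len(weights))  # one scale for everything
per_block = fake_quantize(weights, block_size=4)              # finer-grained scaling
print(per_tensor[:4])  # small values underflow to zero
print(per_block[:4])   # small values survive, within FP8 rounding error
```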

A cornerstone of DeepSeek’s energy efficiency is its mixture-of-experts architecture, which activates only a subset of its parameters, grouped into specialized “experts,” for each input, significantly reducing computational load and energy use.
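
The routing idea behind mixture-of-experts can be sketched with generic top-k softmax gating, a common textbook form: a small router scores every expert, only the k highest-scoring experts actually run, and their outputs are blended using the normalized scores. This is not DeepSeek’s exact router, whose details differ; the toy experts and router weights below are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_weights, experts, top_k=2):
    """Route input x to its top_k experts; all other experts are skipped entirely."""
    # One router score per expert: dot product of that expert's router weights with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    gates = softmax([scores[i] for i in chosen])  # mixing weights over chosen experts only
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only top_k expert computations happen per input
        out = [o + gate * yj for o, yj in zip(out, y)]
    return out, chosen

calls = []  # records which experts actually ran

def make_expert(k):
    def expert(v):
        calls.append(k)
        return [k * vi for vi in v]  # toy expert: scale the input by k
    return expert

experts = [make_expert(k) for k in range(8)]
router_weights = [[float(k), 0.0] for k in range(8)]  # toy router that favours higher k
out, chosen = moe_forward([1.0, 2.0], router_weights, experts, top_k=2)
print(chosen, f"{len(calls)} of {len(experts)} experts ran")  # [7, 6] 2 of 8 experts ran
```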

DeepSeek also introduced a novel training approach for its reasoning model. It trained the model on a set of problems with known correct answers but, instead of relying on the fine-grained feedback from humans or AI models used in traditional reinforcement learning techniques, it let the model adjust its own strategy to favour outcomes that produced correct answers. This allowed reasoning to emerge over time, through multiple “aha moments.”
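
DeepSeek’s published work describes a reinforcement-learning method called Group Relative Policy Optimization (GRPO): the model samples several answers to the same problem, each answer receives a simple rule-based reward for correctness, and each answer is scored against its own group’s average instead of by a separate critic model. The sketch below shows only that reward-and-advantage step; the policy update that uses these advantages to make above-average answers more likely is omitted, and the sampled answers are hypothetical.

```python
from statistics import mean, pstdev

def outcome_rewards(answers, correct_answer):
    """Rule-based reward: 1.0 if a sampled answer matches the known answer, else 0.0."""
    return [1.0 if a.strip() == correct_answer else 0.0 for a in answers]

def group_relative_advantages(rewards, eps=1e-8):
    """Score each sample relative to its group: (reward - group mean) / group std."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Four hypothetical answers sampled for one problem whose known answer is "42"
rewards = outcome_rewards(["42", "41", " 42", "7"], "42")
advantages = group_relative_advantages(rewards)
print(rewards)                            # [1.0, 0.0, 1.0, 0.0]
print([round(a, 3) for a in advantages])  # [1.0, -1.0, 1.0, -1.0]
```

When every sample in a group earns the same reward, all advantages are zero, so that group contributes no learning signal.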

Standard transformer models store full-size key and value vectors for every token they have processed, and this cache consumes much of the memory needed to generate long responses. DeepSeek’s Multi-Head Latent Attention instead compresses that information into a much smaller latent representation for each token. This uses just 5–13 per cent of the memory previously required, without sacrificing accuracy. DeepSeek also uses Multi-Token Prediction to predict multiple words at once, improving context awareness and response speed.
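
Multi-Head Latent Attention saves memory mainly in the attention key-value cache, which standard attention fills with full-size keys and values for every head, layer, and token. The arithmetic can be sketched with a hypothetical model shape (not DeepSeek’s actual configuration), 16-bit values, and an 8,192-token context; the real design also caches a small positional component, omitted here. The resulting ratio depends entirely on the dimensions chosen.

```python
def kv_cache_bytes(tokens, layers, values_per_token_per_layer, bytes_per_value=2):
    """Attention-cache memory across all layers (2 bytes per value = 16-bit)."""
    return tokens * layers * values_per_token_per_layer * bytes_per_value

# Hypothetical model shape, chosen for illustration only
HEADS, HEAD_DIM, LAYERS, LATENT_DIM, TOKENS = 32, 128, 40, 512, 8_192

standard = kv_cache_bytes(TOKENS, LAYERS, 2 * HEADS * HEAD_DIM)  # full keys + values per head
latent = kv_cache_bytes(TOKENS, LAYERS, LATENT_DIM)              # one compressed vector per token
print(f"{standard / 2**20:,.0f} MiB vs {latent / 2**20:,.0f} MiB ({latent / standard:.2%})")
# 5,120 MiB vs 320 MiB (6.25%)
```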

By significantly reducing inference costs, DeepSeek also makes sustainable AI economically viable (at the time of publication, processing 1 million input tokens with DeepSeek-R1 cost just US$0.28, while 1 million output tokens were priced at US$0.42).
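
At the per-token prices quoted for DeepSeek-R1, a workload’s inference bill is simple to estimate. A minimal sketch; the request volume and token counts are hypothetical, and published prices change over time:

```python
PRICE_PER_M_INPUT = 0.28   # US$ per 1M input tokens (price quoted in the text)
PRICE_PER_M_OUTPUT = 0.42  # US$ per 1M output tokens (price quoted in the text)

def inference_cost(requests: int, input_tokens: int, output_tokens: int) -> float:
    """US$ cost of `requests` calls, each with the given per-request token counts."""
    total_in = requests * input_tokens
    total_out = requests * output_tokens
    return (total_in * PRICE_PER_M_INPUT + total_out * PRICE_PER_M_OUTPUT) / 1_000_000

# Hypothetical workload: 10,000 requests, 2,000 input and 500 output tokens each
cost = inference_cost(10_000, 2_000, 500)
print(f"US${cost:.2f}")  # US$7.70
```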

Decarbonizing Data Centres with an Edge Computing-focused Strategy: Smartly deploying a distributed computing model like edge computing can balance the needs of high-throughput AI systems with sustainability. By bringing computation closer to the point of transaction or data origination, companies can reduce the amount of energy their AI models need by avoiding unnecessary network transit, lightening cooling loads in big facilities and enabling more efficient hardware upgrades at strategic endpoints.

Companies like Mastercard exemplify this approach. Billions of transactions flow through Mastercard’s “cardiovascular system.” AI plays a crucial role in real-time fraud detection and customer insights, increasing the company’s power demand from data centres. This has led to efforts to manage consumption more efficiently as the company grows.

To address this, the company implemented edge computing, processing workloads closer to transaction points to minimize energy-intensive data transfers and ease cooling demands on centralized data centres. In effect, its transaction network can be thought of as one large edge computing network. Initial results show significant improvements, with server upgrades and localized computing leading to noticeable reductions in energy consumption per node.

This shift depends on cross-functional collaboration among developers, data scientists, data centre operators, and senior leadership. Operations teams track the real-time energy use of endpoints and tell development teams where server refreshes and new edge nodes can yield the biggest efficiency gains. Using this information, procurement can prioritize the devices and components that best meet environmental standards, while developers design and calibrate AI workloads to make the best use of new processing capabilities, adopt carbon-aware scheduling, and optimize for localized inference.
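
Carbon-aware scheduling can be as simple as shifting a deferrable job to the hours when the grid is cleanest. A minimal sketch, assuming an hourly carbon-intensity forecast is available; the function name and forecast values are hypothetical and do not represent Mastercard’s tooling:

```python
from typing import Sequence

def best_start_hour(intensity: Sequence[float], duration: int) -> int:
    """Return the start index minimizing total grid carbon intensity over `duration` hours.

    intensity: forecast grams of CO2 per kWh for each upcoming hour (hypothetical input)
    """
    if not 0 < duration <= len(intensity):
        raise ValueError("duration must fit inside the forecast window")
    window = sum(intensity[:duration])
    best_sum, best_start = window, 0
    # Slide the window one hour at a time, updating the running sum in O(1).
    for start in range(1, len(intensity) - duration + 1):
        window += intensity[start + duration - 1] - intensity[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start

forecast = [450, 420, 300, 180, 160, 210, 390, 480]  # hypothetical gCO2/kWh by hour
print(best_start_hour(forecast, duration=3))  # 3
```

Minimizing the window’s total is equivalent to minimizing its average intensity, since every candidate window has the same length.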

Mastercard also developed a guide for technologists, encouraging AI projects to consider carbon cost, hardware usage, and location-based scheduling by design. It partnered with the Green Software Foundation to offer training to more than 6,000 software engineers in sustainable coding and monitoring cloud usage.

Taking a Cross-organization Approach to Sustainable AI: Wide-ranging coordination, from data to hardware to customer relationships, can also yield tangible carbon reductions and cost savings. Kuehne+Nagel, a global leader in logistics, for example, struggled to balance scaling artificial intelligence with its environmental goals as it rapidly deployed AI to process more than 1.5 billion messages a year to enhance real-time asset tracking, customs processes, and customer service.

The company’s sustainability challenge was amplified by four key factors. First, 30 per cent annual growth in transaction volume threatened to push its traditional data centre setup out of control. Second, logistics demands near-perfect traceability, which raised the accuracy requirements, and therefore the computational intensity, of its AI algorithms. Third, the resulting expansion of GPUs and other AI hardware created a mountain of e-waste and disposal concerns. Fourth, all this came just as new European Scope 3 emissions rules required AI-driven growth to align with sustainability goals.


By taking a cross-functional and cross-organizational view, Kuehne+Nagel focused on three key steps to address this challenge. To start, it used generative AI to clean its supply-chain data, raising accuracy to 95 per cent, compared with 70 per cent using manual processes, and cutting data-cleansing costs by 95 per cent. More significantly, this AI-led data optimization cut wasted shipments, rework, and warehouse idle time, substantially lowering both energy use and emissions. The net result was outsized resource savings.

Second, Kuehne+Nagel integrated AI’s Scope 3 emissions into its deployment process by sharing precise analytics and data with customers and partners. For example, providing accurate AI-driven estimates for arrival times helped customers staff their warehouses precisely, slashing overtime and curbing energy wastage. This required collaboration between customer success teams, data engineers, and each client’s planning group.

Finally, Kuehne+Nagel treated extending device lifetimes as central to shrinking AI’s embodied carbon. For each additional year of use, the company achieved a 25 per cent reduction in annual carbon emissions per asset, savings that added up significantly across thousands of assets. It also partnered with refurbishing firms to resell or donate older machines.
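
How the 25 per cent figure was calculated is not stated. One simple model that produces it is straight-line amortization of a device’s embodied (manufacturing) emissions over its service life: stretching a hypothetical three-year lifetime to four years cuts the emissions attributed to each year of use by a quarter. The embodied-carbon value below is made up for illustration:

```python
def annual_embodied_kg(embodied_kg: float, lifetime_years: float) -> float:
    """Straight-line amortization: embodied emissions spread evenly over each year of use."""
    return embodied_kg / lifetime_years

EMBODIED_KG = 1_200.0  # hypothetical manufacturing emissions of one device, kg CO2e

three_years = annual_embodied_kg(EMBODIED_KG, 3)  # assumed baseline lifetime
four_years = annual_embodied_kg(EMBODIED_KG, 4)   # one additional year of use
reduction = 1 - four_years / three_years
print(three_years, four_years, f"{reduction:.0%}")  # 400.0 300.0 25%
```

Under this model the gain shrinks with each extra year (going from four to five years gives 20 per cent), so the baseline lifetime matters when comparing such figures.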

Collaborating to Embed AI Governance as Code: AI sustainability isn’t just a technology problem—it’s an industry coordination challenge. No global consensus exists yet on balancing AI’s compute requirements with its growing environmental footprint. Traditional regulations can prescribe static parameters, but they fail to keep pace with AI’s rapid evolution—or to secure broad industry buy-in.

Instead of relying only on regulations, governments and companies can proactively engage with their ecosystems to shape norms governing AI’s energy impact. Singapore’s Infocomm Media Development Authority (IMDA), for instance, convened cloud providers, data centre operators, chipmakers, and research institutions to co-create a data centre sustainability roadmap tailored to Singapore’s unique constraints: heat, humidity, and soaring demand. This complemented conventional measures, such as mandating basic power usage effectiveness limits. Early engagement with multiple stakeholders revealed that tropical cooling costs, the needs of emerging machine-learning hardware, and water scarcity concerns were interlinked.

Operators and IMDA shared data to pilot new solutions, resulting in practical guidelines. One key initiative involved raising operating temperature thresholds for data centres, which offers cooling energy savings of 2–5 per cent per degree. IMDA also supported testbeds for dynamically adjusting cooling to real-time workloads, decreasing energy use while collecting performance metrics for iterative improvement. IMDA and Singapore’s Building and Construction Authority also worked with industry to revise the Green Mark rating scheme for data centres, giving operators an incentive to improve their sustainability performance.

IMDA’s approach ensured that companies became co-authors of these standards rather than mere followers, helping bridge the gap between forward-looking policy and on-the-ground implementation.

This collaborative spirit is also seen in the development of “governance-as-code” tools. For example, Microsoft’s Emissions Impact Dashboard helps organizations measure, monitor, and reduce AI workloads’ carbon footprint through automated policy compliance. Similarly, Google’s Compute Carbon Intensity metric measures carbon emissions per unit of computation, enabling intelligent task scheduling in locations with cleaner energy. These tools provide practical frameworks for embedding sustainability directly into AI operations and decision-making.
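
The APIs of the tools named above are not shown here. To illustrate the underlying governance-as-code idea, the hypothetical sketch below encodes a carbon budget as an automated check that places a job in the lowest-emission region and blocks it if no region fits the budget. Emissions are estimated as IT energy × power usage effectiveness × grid carbon intensity, a common location-based estimate; all region names, intensities, and budgets are made up.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Region:
    name: str
    grid_gco2_per_kwh: float  # average grid carbon intensity (hypothetical values below)
    pue: float                # power usage effectiveness of the facility

def job_emissions_kg(it_energy_kwh: float, region: Region) -> float:
    """Estimated emissions: IT energy x PUE (facility overhead) x grid intensity, in kg CO2e."""
    return it_energy_kwh * region.pue * region.grid_gco2_per_kwh / 1000.0

def place_job(it_energy_kwh: float, regions: Sequence[Region], budget_kg: float) -> Region:
    """Policy as code: choose the lowest-emission region, or refuse if none fits the budget."""
    best = min(regions, key=lambda r: job_emissions_kg(it_energy_kwh, r))
    if job_emissions_kg(it_energy_kwh, best) > budget_kg:
        raise RuntimeError(f"no region keeps this job under {budget_kg} kg CO2e")
    return best

regions = [
    Region("region-a", grid_gco2_per_kwh=450.0, pue=1.2),
    Region("region-b", grid_gco2_per_kwh=120.0, pue=1.5),
    Region("region-c", grid_gco2_per_kwh=300.0, pue=1.1),
]
chosen = place_job(it_energy_kwh=1_000.0, regions=regions, budget_kg=200.0)
print(chosen.name, job_emissions_kg(1_000.0, chosen))  # region-b 180.0
```

Note that the least efficient facility by PUE still wins here because its grid is far cleaner, which is why such checks weigh both factors.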

The road to sustainable AI

AI sustainability isn’t just a passing opportunity; it’s a pivotal moment for AI, one that is redefining how intelligence is built and deployed. To truly unlock AI’s potential, we need to shift from asking, “How powerful is our AI?” to “What are we getting for the resources we’re investing in AI?” This means embedding sustainability as a core principle, not an afterthought.

Companies that embrace the five actions outlined above (putting smarter silicon to work; unlocking scaling efficiency with algorithmic design; decarbonizing data centres with an edge computing-focused strategy; taking a cross-functional, cross-organization approach; and embedding AI governance as code) will not only meet environmental targets but also gain a significant edge in sustainability and position themselves for long-term success. This integrated approach turns AI into a powerful force for decarbonization rather than a driver of emissions.

As AI adoption accelerates, organizations that lead in responsible AI will play a vital part in shaping the next era of AI, where progress and sustainability go hand in hand.

The authors thank Giju Mathew, Subhransu Sahoo, Manisha Dash, and Vibhu S. Sharma for their input.